Insider risks can no longer be ignored

$M in costs

Average annual cost of insider-led cyber incidents (2025 Ponemon Cost of Insider Risks Global Report)

85% involve humans

Eighty-five percent of breaches involved human interaction (Verizon 2021 Data Breach Investigations Report)

81 days to contain

Insider incidents take an average of 81 days to contain, and every additional day costs you more.

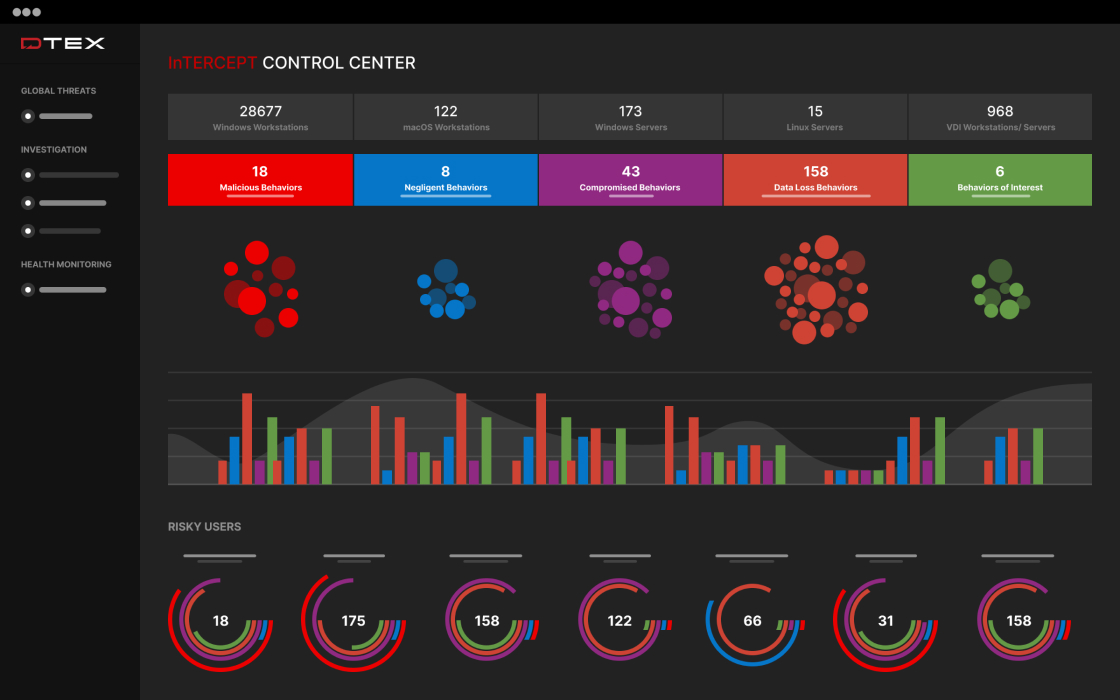

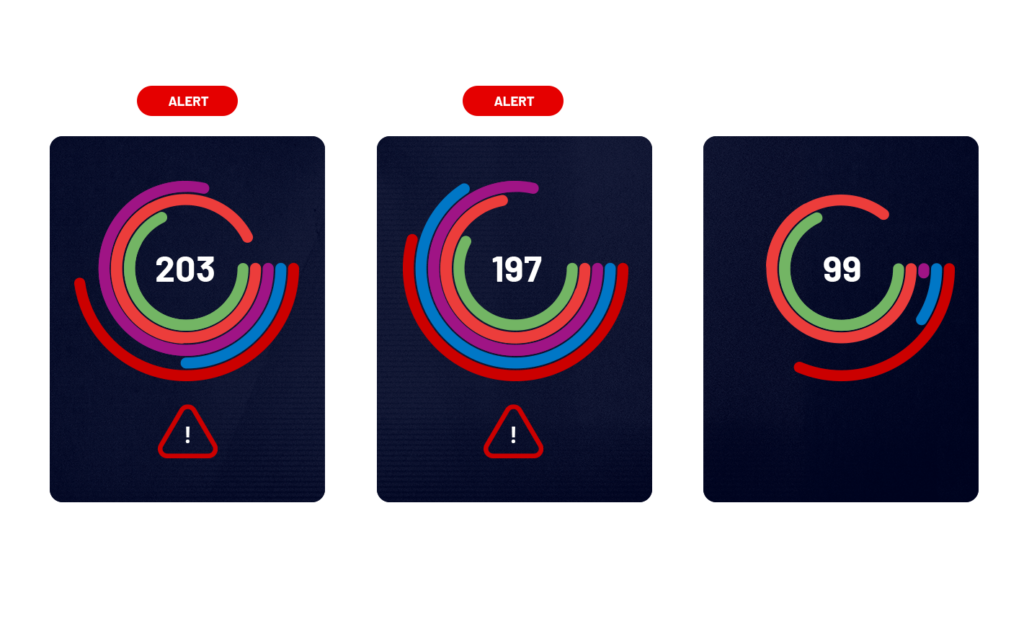

DTEX prevents risks from becoming costly threats

Predictive, proactive, preventative

DTEX analyzes activity, trends, and behavior to provide actionable intelligence and early warning signs of insider threats. Quickly answer Who, What, When, Where, and How. Detect and automate responses to prevent data loss or theft.

Privacy to address multiple use cases

Lightweight and scalable

Cutting-edge research & intelligence

KEY RESOURCES

Ponemon 2025 Cost of Insider Risks Global Report

Independently conducted by the Ponemon Institute.

Customer Insights

Hear Melissa, Lead at the Threat Management Center at Verizon, talk about her experience with DTEX as an insider risk management tool.

She touches on DTEX’s ability to deploy both on-prem and in the cloud at scale, its dynamic risk scoring, and its behavioral analytics. She also offers advice to those looking to stand up an insider risk management program.
