- Generative AI agent use monitoring

- AI use policy and enforcement

- Sensitive data classification

- Allow listing

INTRODUCTION

AI agents are rapidly gaining traction as major technology companies introduce their own versions to the market. These tools are often perceived as “magical black boxes” that promise to streamline workflows, sharpen focus, and simplify complex tasks. While the core technologies behind these agents are not entirely new, the abstraction layer they introduce changes how users interact with them. This shift requires organizations to reassess how they manage the risks associated with AI integration, especially as these tools become more embedded in daily operations.

One of the key challenges is distinguishing between actions taken by human users and those initiated by AI agents. This ambiguity has serious implications for auditing, accountability, and compliance, as it becomes harder to trace the origin of decisions or actions. Additionally, there are growing concerns around data exposure. Users may unknowingly share sensitive or proprietary information with AI agents, often without a clear understanding of what data is safe to disclose. Prompt injection attacks further complicate the landscape, as malicious actors can manipulate inputs to extract confidential data or alter an agent’s behavior, exploiting the agent’s interpretive flexibility and limited contextual awareness.

This Insider Threat Advisory (iTA) aims to educate on the risks tied to AI agent usage and the tools available to monitor and manage them. It will cover current telemetry and logging capabilities, methods for identifying AI-generated actions, and best practices for data governance and prompt hygiene. The discussion will also look ahead to future developments in AI observability and control, helping organizations better assess and adapt their threat profiles in an evolving technological environment.

Some parts of this Threat Advisory are classified as “limited distribution” and are accessible only to approved insider risk practitioners. To view the redacted information, please log in to the customer portal or contact the i³ team.

DTEX INVESTIGATION AND INDICATORS

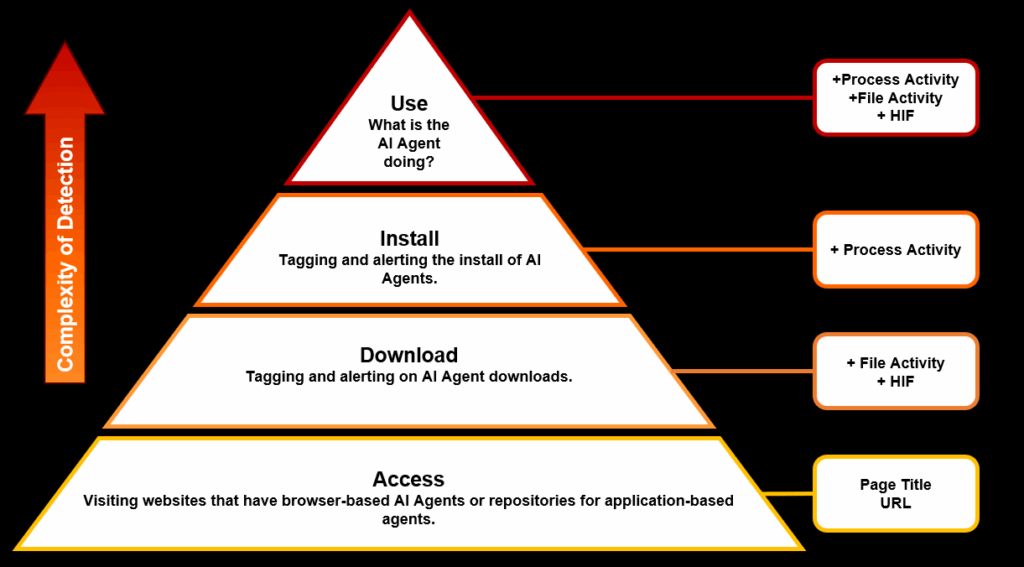

We will break down the investigation stages and the three use cases described in the introduction. First, though, we want to frame the difficulty of detection in distinct areas, which will help the discussion as we show how DTEX can detect activity at each stage.

As we travel up the pyramid, it becomes more difficult to detect AI agents, and DTEX requires more functions and modules to accurately tag and alert on activity. For example, Access is tracked through web page access activity. Moving up to Use, however, organizations monitoring browser-based AI agents will need HTTP Inspection Filtering rules profiled and enabled.

Stage: Reconnaissance

complexity: access, download

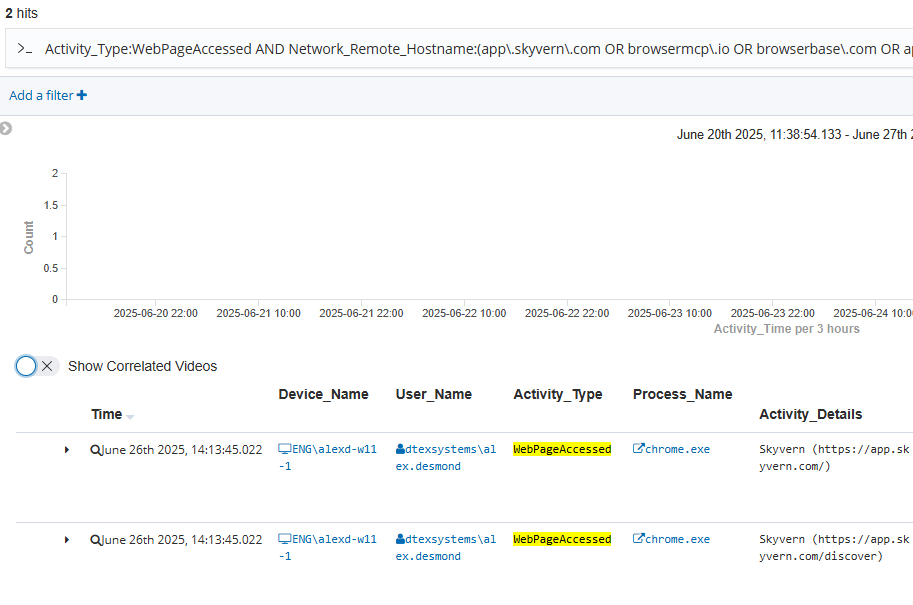

Most browser-based AI agents provide a dedicated sandbox environment — typically accessible via a separate URL — where users can input instructions to automate tasks. Monitoring access to these portals can offer valuable insights into the types of AI-driven automation being explored within an organization, helping to assess potential threat vectors and exposure.
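As a rough illustration of portal monitoring, the sketch below matches visited URLs against a watchlist of agent sandbox domains and counts visits per user. The domain names, the event shape (`user` and `url` keys), and the function names are hypothetical placeholders, not DTEX functionality or real agent portals.

```python
from urllib.parse import urlsplit

# Hypothetical watchlist; replace with the agent portals relevant to your environment.
AGENT_PORTAL_DOMAINS = {"agent.example-ai.com", "operator.example.net"}


def is_agent_portal(url, watchlist=AGENT_PORTAL_DOMAINS):
    """Return True if the URL's host is a watched domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain) for domain in watchlist)


def portal_visits(events):
    """Count agent-portal visits per user.

    `events` is an iterable of dicts with assumed keys 'user' and 'url'.
    """
    counts = {}
    for event in events:
        if is_agent_portal(event["url"]):
            counts[event["user"]] = counts.get(event["user"], 0) + 1
    return counts
```

Matching on the parsed hostname rather than on a substring of the URL avoids false hits on lookalike hosts that merely contain a watched domain name.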

Stage: Obfuscation

complexity: install, use

AI agents that run on the endpoint need an executable and a framework to perform user-related functions. This is not new; rather, AI has been placed on top of existing automation frameworks. One of the more popular frameworks uses Python, which has functions to simulate actions such as mouse clicks and keyboard input. OpenAI has then added functionality, in the form of the agent, to query the OpenAI API so that, theoretically, a user can enter a task as text and the AI converts that text into actions for the agent to perform.

In this example, we base the research on the OthersideAI GitHub repository. The repository can also be reached from the HyperWrite | AI Writing Assistant website, where the company handles setup for the user and then provides a downloadable agent with the configurations applied.

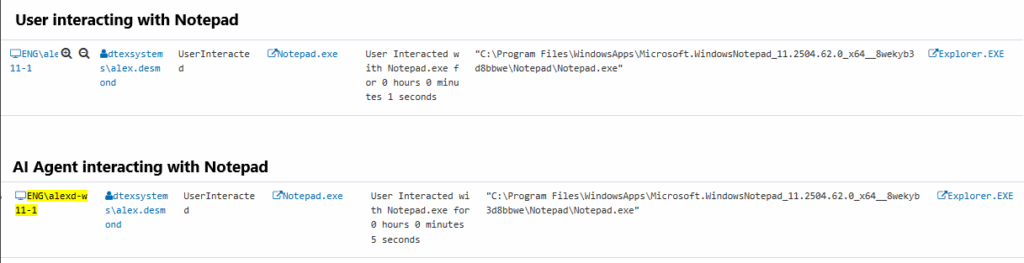

The main purpose of this agent is to assist with content generation. As seen in the example, it can open File Explorer and applications such as Notepad and then enter the content. The activity itself, including the parent process, is the same as what you would see for a user.

The telltale sign is the operate process spawning a python process. The activity between process execution and termination is the activity the agent performs. In this case the agent runs on the endpoint and is not sandboxed, so the user cannot perform other activities while it is running unless that was programmed in, such as allowing the user to solve a CAPTCHA.

For additional detection mechanisms we can also use Focused Observation to capture activity when the operate.exe process spawns and terminates.
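The parent/child relationship described above can be sketched as a simple pass over process telemetry. This is a minimal illustration, assuming a hypothetical `ProcessEvent` record with start and stop actions. It is not the DTEX data model, and the session window is taken to be the lifetime of the spawned python child, which is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ProcessEvent:
    """Hypothetical process telemetry record (not a DTEX schema)."""
    pid: int
    ppid: int
    name: str
    action: str       # "start" or "stop"
    timestamp: float


def find_agent_sessions(events, parent_name="operate.exe", child_prefix="python"):
    """Return (child_pid, start, stop) for python processes spawned by the agent launcher."""
    running = {}        # pid -> lowercase name of currently running processes
    open_sessions = {}  # child pid -> start timestamp
    sessions = []
    for ev in sorted(events, key=lambda e: e.timestamp):
        name = ev.name.lower()
        if ev.action == "start":
            running[ev.pid] = name
            # Flag only python children whose parent is the launcher process.
            if name.startswith(child_prefix) and running.get(ev.ppid) == parent_name:
                open_sessions[ev.pid] = ev.timestamp
        elif ev.action == "stop":
            running.pop(ev.pid, None)
            if ev.pid in open_sessions:
                sessions.append((ev.pid, open_sessions.pop(ev.pid), ev.timestamp))
    return sessions
```

Each returned window bounds the period in which input activity on the endpoint is likely to be agent-driven, which is the span an analyst would want to review more closely.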

Stage: Exfiltration

complexity: access, use

In this example, we demonstrate the use of a browser-based AI automation agent that offers free credits, with additional functionality available on a pay-per-step basis. The use case illustrates how a malicious actor could leverage such an agent to exfiltrate data from an organization with minimal footprint — particularly in environments lacking HTTP inspection. We then contrast this with what becomes visible when HTTP inspection is enabled, highlighting the critical role of network visibility in detecting and mitigating such threats.

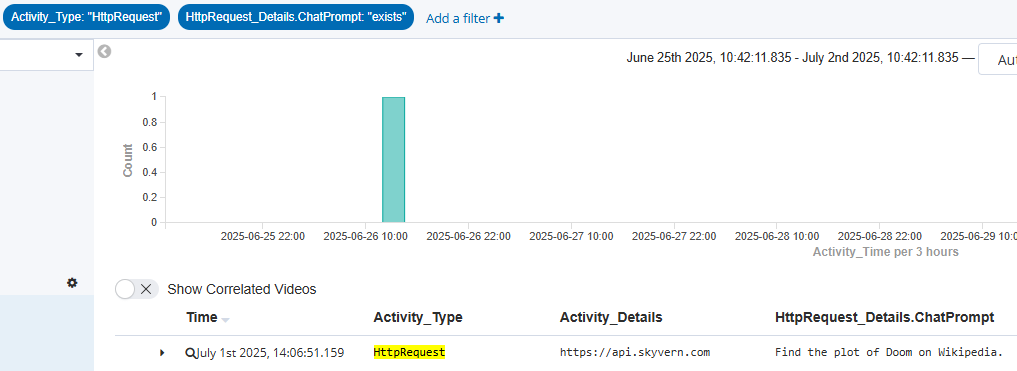

In this specific example, with profiling enabled, the first step is to review the user's chat prompts to gain context and understand the tasks being requested.

Now that we have covered some practical examples, let us dive into the use cases for AI agents.

Use case #1: agent vs. human activity

One of the most pressing concerns for organizations adopting AI agents is the growing uncertainty around task attribution. Specifically, leaders are asking:

“Is this task being performed by my employee — or by an AI agent acting on their behalf?”

This ambiguity introduces a range of operational, ethical, and security risks that organizations must proactively address to maintain trust, accountability, and compliance.

Key issues that can arise:

- Overemployment or ghost work. Employees may use AI agents to fulfill multiple roles across different organizations simultaneously, without disclosing their true workload or availability. This can lead to conflicts of interest, reduced productivity, and reputational damage if discovered. It also raises questions about fairness and transparency in the workplace.

- Time theft. When AI agents are completing tasks autonomously, it becomes difficult to verify whether employees are actively engaged or simply delegating their responsibilities. Traditional time-tracking and performance evaluation systems may no longer provide an accurate picture of employee contributions, undermining trust and accountability.

- Audit and attribution challenges. Without clear visibility into whether a human or an AI performed a given action, it becomes difficult to assign responsibility for decisions, errors, or breaches. This is particularly problematic in regulated industries where accountability and traceability are non-negotiable.

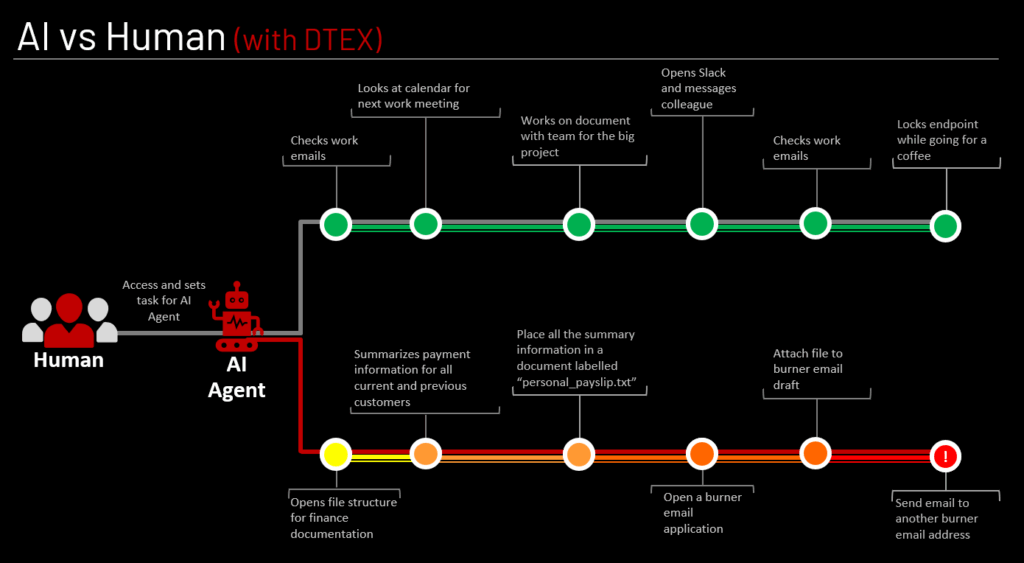

The above figure illustrates an example stitched together from our experience researching various tools, and it is something that can plausibly occur. The human can give an AI agent commands to perform Research, Aggregation, and Exfiltration, all while still performing their own work tasks. This level of Obfuscation should concern all organizations. As the examples above demonstrated, without monitoring, detection, and alerts in place, the majority of this type of activity could go undetected, allowing data to walk right out the door.

We predict that this will not appear as an all-in-one solution. Rather, malicious insiders will combine multiple AI-infused tools to perform the actions needed to achieve their goals.

Use case #2: data exposure risks

Both AI agents and humans using generative AI chat websites (like ChatGPT, Gemini, or Claude) can expose sensitive company information, but the mechanisms, scale, and visibility of the risks differ in important ways. Here’s a breakdown of how their data exposure risks are similar and how they diverge:

Similarities in data exposure risks

- Unintentional disclosure

  - Both humans and AI agents can inadvertently share sensitive data (e.g., customer records, internal documents, credentials) when interacting with generative AI tools.

  - This often happens when users or agents are unclear about what data is safe to share or assume the tool is operating in a secure, isolated environment.

- Third-party data handling

  - In both cases, data may be sent to external servers (e.g., OpenAI, Anthropic), raising concerns about data residency, retention, and compliance with regulations like GDPR or HIPAA.

Key differences in risk profile

| Aspect | Human Using Chat Website | AI Agent |
|---|---|---|
| Control & Awareness | Users typically know they are interacting with an external tool and may exercise caution. | Agents operate autonomously and may not distinguish between sensitive and non-sensitive data. |
| Scale of Exposure | Limited to individual sessions or queries. | Can operate at scale, accessing multiple systems and datasets continuously. |
| Auditability | Easier to trace actions to a specific user and session. | Harder to attribute actions, especially if the agent is embedded in workflows or tools. |
| Policy Enforcement | Users can be trained and policies enforced through access controls. | Agents require embedded guardrails and runtime monitoring to enforce policies. |
| Persistence | Human interactions are often one-off. | Agents may persist across sessions, increasing the risk of long-term exposure or memory-based leakage. |

When considering the scale of data exposure, it’s important to recognize that the risk extends beyond accidental leaks or misconfigurations. AI agents can also amplify the capabilities of threat actors or malicious insiders, making it significantly easier and faster for them to conduct harmful activities such as reconnaissance, data aggregation, and exfiltration.

Unlike traditional tools, AI agents can autonomously navigate systems, interpret unstructured data, and synthesize information across multiple sources. This means a malicious actor could potentially leverage an AI agent to map out internal systems, identify sensitive data, and compile it into usable formats — all with minimal effort and in a fraction of the time it would take manually.

Because AI agents often operate with broad access and limited oversight, they can unintentionally assist in these activities without triggering traditional security alerts.

Use case #3: LLM vulnerabilities, prompt injection attacks

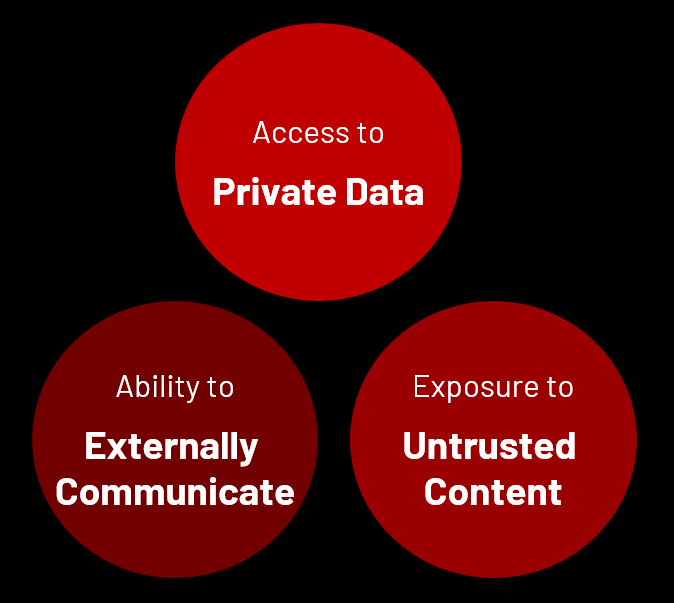

We want to introduce a concept coined by Simon Willison known as the Lethal Trifecta [1]: the combination of access to private data, exposure to untrusted content, and the ability to communicate externally.

This risk applies to any individual or system that can be manipulated through prompt injection attacks. As highlighted in Simon's blog series, this isn't a challenge organizations can expect vendors to solve, especially when multiple tools are integrated into a single solution. To illustrate, consider an organization using an AI agent to summarize LinkedIn profiles during the hiring process. If a candidate embeds a crafted prompt in their profile, the agent could be tricked into executing unintended actions, such as leaking internal data or altering its output. This example shows how easily prompt injection can compromise workflows if not properly mitigated.

While specific configurations are required for an LLM or AI agent to be vulnerable, this example highlights how prompt injection becomes a serious risk — especially when organizations enable what we call the Lethal Trifecta.
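One way to make the Lethal Trifecta concrete is to check an agent's configuration for the three conditions Willison describes: access to private data, exposure to untrusted content, and the ability to communicate externally. The sketch below assumes a hypothetical `AgentCapabilities` record. The field names are illustrative and do not come from any vendor's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical summary of what an agent deployment is allowed to do."""
    name: str
    reads_private_data: bool
    ingests_untrusted_content: bool
    can_communicate_externally: bool


def has_lethal_trifecta(agent):
    """True only when all three conditions are enabled together."""
    return (
        agent.reads_private_data
        and agent.ingests_untrusted_content
        and agent.can_communicate_externally
    )


def enabled_legs(agent):
    """List which of the three conditions are enabled, for review purposes."""
    legs = {
        "private data": agent.reads_private_data,
        "untrusted content": agent.ingests_untrusted_content,
        "external communication": agent.can_communicate_externally,
    }
    return [leg for leg, enabled in legs.items() if enabled]
```

In the hiring example, an agent that reads internal candidate notes, ingests public profiles, and can send email would be flagged, while removing any one of the three capabilities would clear the check.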

Profiles and Personas

Profiles are helpful for describing an incident clearly, offering all relevant details in a single, easy-to-read table. While this iTA does not include a specific profile, readers can refer to our previous iTA-25-03 Insider Sabotage: When Trusted Hires Turn Rogue for an example of how profiles are structured.

The personas in this section are developed specifically within the context of an AI agent being deployed and utilized in an organizational environment.

Persona(s)

These personas are designed to help organizations conceptualize and differentiate threat-hunting strategies. By separating these behavioral patterns, teams can proactively detect and respond to risks without needing to replicate the exact scenario through technical emulation.

| Persona | Description | Factors |
|---|---|---|
| Departing Employee | This could involve an individual preparing to exit the company, potentially aggregating as much proprietary data as possible, an activity that also overlaps with behaviors seen in AI-powered notetaking applications. | |
| Malicious Insider | The malicious insider can have a range of motivations, and for simplicity, we’re including The Compromised Insider, where an external threat actor has taken control of a legitimate account, under the same persona. | |
| The Over-Privileged User | AI agents operate with the same permission level as the user who runs them unless explicitly restricted. Over-privileged users could configure agents to perform tasks on servers where they hold admin rights, potentially leading to data breaches or creating new attack surfaces. | |

Recommended Actions

DTEX Platform Detection Multipliers

To enhance detection visibility and monitoring within their environments, organizations can implement specific strategies using DTEX.

- Focused Observation. Focused Observation is the overarching capability to enhance monitoring on specific users.

- HTTP Inspection Filtering. Enabling the entire range of rules provides a much more definitive view of a user’s behaviors. HTTP inspection provides comprehensive context for effectively monitoring and analyzing AI usage within the organization. This was pivotal in this investigation, helping investigators focus on servers.

- GenAI Dashboard. Summarizes the information collected from the HTTP Inspection Filtering rules for easier triage. This includes users’ activity on websites and chat prompts entered if the website has a rule.

Early Detection and Mitigation

To mitigate risks associated with both human and AI agent interactions, organizations should implement robust data classification, redaction, and data loss prevention (DLP) tools to protect sensitive information. For human users, it’s essential to provide training on the safe and responsible use of AI technologies and to restrict access to unauthorized external AI tools. For AI agents, security can be strengthened through sandboxing, strict access controls, and the deployment of observability tools that enable real-time monitoring of agent behavior to detect and respond to anomalies promptly.

Generative AI agent use monitoring

The ability to monitor and review the insider’s conversation history proved to be a pivotal turning point in the investigation. In the absence of direct cyber indicators linking the individual to server activity, these conversations provided critical context and behavioral evidence.

This insight enabled investigators to escalate the priority of the incident and strategically shift focus toward the organization’s crown jewels—its most sensitive and high-value assets. The use of conversational data as a behavioral signal highlights the growing importance of cross-domain visibility in insider threat investigations.

AI use policy and enforcement

Much like this organization, we are increasingly seeing the implementation of clear AI usage policies accompanied by employee training programs aimed at mitigating AI-related risks. These efforts align closely with the recommendations outlined in the i³ Threat Advisory: AI Note-Taking Tools for Data Exfiltration.

However, the next critical step for organizations is moving beyond policy and education toward enforcement mechanisms. This can take the form of active allowlisting of approved AI tools or the development of a structured “teachable moments” program that reinforces responsible AI use through real-time feedback and guidance.

Both approaches can be effectively informed and supported by insights from the Generative AI Utilization Dashboard, which provides visibility into usage patterns, tool diversity, and potential policy violations — enabling data-driven enforcement and continuous improvement.

Sensitive data classification

To effectively protect sensitive information, organizations must first identify and label data based on its sensitivity and business value. This begins with a data discovery process, using automated tools to scan across endpoints, servers, cloud storage, and databases. These tools detect patterns like Social Security numbers, credit card data, or proprietary code, and apply classification labels such as Confidential, Restricted, or Public.
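The pattern-detection step can be illustrated with a small scanner. This is a simplified sketch, not how any particular discovery product works: the regular expressions are deliberately loose, card candidates are filtered with the Luhn checksum, and the mapping from findings to a label (any finding means Restricted, otherwise Public) is an assumption made for the example.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")  # candidate card numbers only


def luhn_valid(number):
    """Luhn checksum over the digits in `number`, ignoring spaces and hyphens."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    checksum = 0
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:      # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        checksum += digit
    return len(digits) >= 13 and checksum % 10 == 0


def scan(text):
    """Return the sensitive pattern types found in `text`."""
    findings = []
    if SSN_RE.search(text):
        findings.append("ssn")
    if any(luhn_valid(match.group()) for match in CARD_RE.finditer(text)):
        findings.append("credit_card")
    return findings


def label_for(text):
    """Assumed policy for this sketch: any finding is Restricted, else Public."""
    return "Restricted" if scan(text) else "Public"
```

The checksum step matters because a bare digit-run pattern would flag order numbers and timestamps. Production tools typically add context checks on top of this kind of validation.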

Next, organizations should conduct a business impact analysis to determine which data assets are mission-critical — these are the crown jewels. Examples include intellectual property, customer databases, financial records, and strategic plans. These assets are not just sensitive — they are essential to the organization’s survival and competitive advantage.

Once identified, crown jewel data should be tagged with the highest classification level, and protected with enhanced controls such as encryption, strict access policies, and continuous monitoring. This classification process should be dynamic and context-aware, adapting as data moves, changes, or is accessed by different users.

Allow listing

Application allowlisting is a critical security control that limits execution to only approved software, reducing the number of applications that require monitoring and threat detection. This minimizes the attack surface and simplifies security operations.

Most applications prioritize functionality over security, even when controls are available. Allowlisting mitigates this risk by blocking unauthorized or potentially harmful software from running.

This practice is widely recommended across cybersecurity assessments and frameworks. It strengthens the overall security posture by preventing malware, reducing insider threats, and supporting compliance with industry standards.
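At its core, allowlisting is a default-deny lookup. The sketch below assumes executables are identified by SHA-256 digest, which is one common approach. Real allowlisting products may also match on publisher, path, or signature, and the function names here are illustrative.

```python
import hashlib


def sha256_of_bytes(data):
    """Hex SHA-256 digest of an executable's contents."""
    return hashlib.sha256(data).hexdigest()


def sha256_of_file(path, chunk_size=65536):
    """Hex SHA-256 digest of a file, read in chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def execution_decision(digest, allowlist):
    """Default deny: only digests present in the allowlist may run."""
    return "allow" if digest in allowlist else "block"
```

Because the decision is default deny, a newly downloaded agent executable is blocked until someone reviews it and adds its digest to the allowlist.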

INVESTIGATION SUPPORT

For intelligence or investigations support, contact the DTEX i³ team. Extra care should be taken when implementing behavioral indicators on large enterprise deployments.
