- Generative AI Conversation Monitoring

- AI Use Policy and Enforcement

- Monitoring and Detection on Crown Jewels

- Integration of Additional Data Feeds and Teams

INTRODUCTION

Engineers, across various disciplines and levels, hold substantial access to critical systems and possess advanced technical expertise that is central to an organization’s operations and value creation. This privileged position, while essential for innovation and efficiency, also presents a significant security risk. If an engineer were to misuse their skills with malicious intent—whether through data theft, system sabotage, or unauthorized access—the consequences could be devastating, potentially leading to operational disruption, financial loss, and reputational damage. [1][2][3]

In collaboration with affected organizations, DTEX has observed post-termination activity by DPRK-affiliated IT workers (ITWs) targeting critical infrastructure within a blockchain entity. Following their dismissal, these individuals attempted to regain access to the organization’s main database and execute malicious code intended to sabotage the system. The attempt was unsuccessful due to the timely revocation of access privileges by the employer.

This incident aligns with broader patterns of activity attributed to DPRK cyber operations. Notably, the DPRK-linked threat group APT45, also known as Andariel, has a documented history of destructive and sabotage-oriented cyber campaigns. This group is closely associated with ITW operations and has demonstrated capabilities that extend beyond espionage into direct system disruption.

One prominent example is the attempted compromise of India’s Kudankulam Nuclear Power Plant, where malware containing hardcoded internal credentials was discovered. Although the attack was ultimately thwarted, it underscores the potential severity of such operations. Another significant campaign attributed to this group is the DarkSeoul attack, which targeted South Korean media and financial institutions, disabling over 48,000 systems in a coordinated destructive effort.

Key Aspects of Sabotage in Insider Risk

Sabotage refers to intentional actions taken by individuals within an organization to disrupt, damage, or destroy its operations, assets, or reputation. From an insider risk perspective, sabotage is particularly concerning because it involves individuals who have legitimate access to the organization’s resources and systems, making it more challenging to detect and prevent.

- Motivation: Individuals may engage in sabotage for various reasons, including personal grievances, ideological beliefs, financial gain, coercion/blackmail, or competitive advantage. Understanding the motivations behind sabotage is crucial for organizations to mitigate risks effectively.

- Access and Knowledge: Insiders typically possess a deeper understanding of the organization’s processes, systems, and vulnerabilities. This knowledge allows them to exploit weaknesses more effectively than external threats, making sabotage more damaging.

- Types of Sabotage:

  - Data Destruction: Deleting or corrupting critical data, which can lead to operational disruptions and loss of valuable information.

  - System Disruption: Intentionally causing system failures or downtime, which can halt business operations and impact productivity.

  - Intellectual Property Theft: Stealing proprietary information or trade secrets to harm the organization or benefit a competitor.

  - Reputational Damage: Engaging in actions that tarnish the organization’s public image, potentially leading to loss of customer trust and business opportunities.

DTEX PLATFORM INVESTIGATION & INDICATORS

During a routine 60-day post-termination review, the DTEX Platform was used to identify a critical insider threat involving a former engineer from a Top 2000 organization. The investigation uncovered a logic bomb embedded within the company’s on-premises Active Directory (AD), designed to delete the entire user base upon the deletion of the engineer’s account.

The individual had recently been affected by organizational layoffs and was part of a team undergoing restructuring. Due to the nature of their role, the engineer’s actions initially appeared consistent with normal job functions, complicating early detection. Behavioral records revealed a history of interpersonal conflict and dissatisfaction with management, despite strong technical performance.

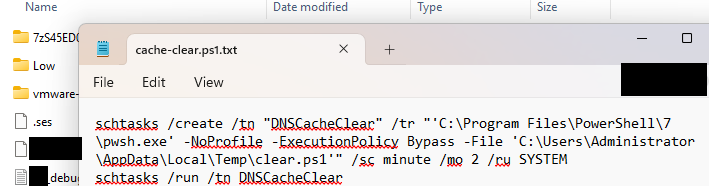

Evidence suggests the engineer anticipated the layoff and used an AI chat tool to assist in scripting the malicious code. The credentials required for execution were obtained through unauthorized access to a penetration testing report, acquired via social engineering. The script was configured with two separate triggers, both set to activate upon the termination of the engineer’s account.

This incident underscores the critical importance of robust insider threat detection, post-termination monitoring, and strict access controls, particularly for employees with elevated privileges and technical capabilities.

Stage: Investigation

60-Day Triage

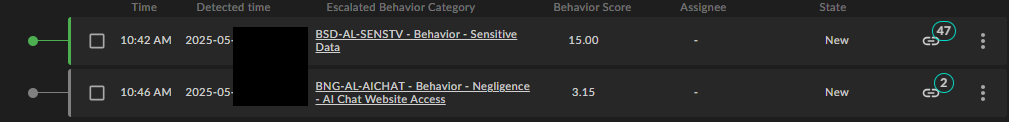

The organization’s process for triaging a terminated employee begins by identifying the user account, followed by a review of their Escalated Behaviors listed on the Alerts Page.

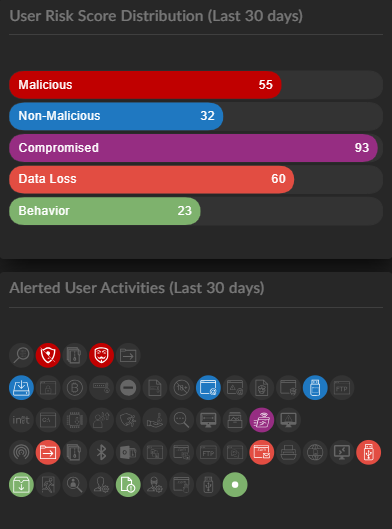

Clicking the username on the left displays an overview of the user’s last 30 days of activity.

To keep the review within scope, apply the following filters and set the time window to “Last 60 days”; this covers the minimum review period defined for terminated employees.

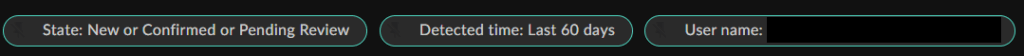
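As a rough illustration of this scoping step, the Python sketch below keeps a single user’s alerts from the 60 days before termination and ranks behavior categories by count, which is how a lead such as a cluster of Generative AI Chat escalations tends to stand out. It assumes a hypothetical `Alert` export record and hypothetical helpers (`alerts_in_review_window`, `count_by_behavior`); none of these names come from the DTEX Platform, and the sample data is invented.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    # Hypothetical export record; field names are illustrative,
    # not the DTEX Platform schema.
    user: str
    behavior: str
    timestamp: datetime


def alerts_in_review_window(alerts, user, termination_date, days=60):
    """Return one user's alerts from the `days` before termination, oldest first."""
    start = termination_date - timedelta(days=days)
    in_scope = [
        a for a in alerts
        if a.user == user and start <= a.timestamp <= termination_date
    ]
    return sorted(in_scope, key=lambda a: a.timestamp)


def count_by_behavior(alerts):
    """Rank behavior categories by alert count, most frequent first."""
    return Counter(a.behavior for a in alerts).most_common()


# Usage with made-up sample data.
termination = datetime(2025, 3, 31)
sample = [
    Alert("jdoe", "Generative AI Chat", datetime(2025, 3, 20)),
    Alert("jdoe", "Generative AI Chat", datetime(2025, 3, 25)),
    Alert("jdoe", "Suspicious GitHub Activity", datetime(2025, 3, 10)),
    Alert("jdoe", "Generative AI Chat", datetime(2024, 12, 1)),  # outside the window
    Alert("asmith", "Generative AI Chat", datetime(2025, 3, 22)),  # different user
]
window = alerts_in_review_window(sample, "jdoe", termination)
print(count_by_behavior(window))
# [('Generative AI Chat', 2), ('Suspicious GitHub Activity', 1)]
```

Widening or narrowing `days` is the only change needed if an organization’s minimum review period differs from 60 days.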

The first lead was the number of Escalated Behaviors for Generative AI Chat websites.

Stage: Potential Risk Indicator

Generative AI Research

The organization permitted the use of Generative AI under a policy that restricted usage to a predefined list of approved sites and tools intended to support workflow efficiency. However, this policy was not enforced through technical controls or monitoring mechanisms.

As illustrated in the figure below, there was a marked increase in Generative AI usage by the individual in the weeks leading up to their termination. Notably, the user accessed a wide range of AI platforms, many of which were outside the organization’s approved list.

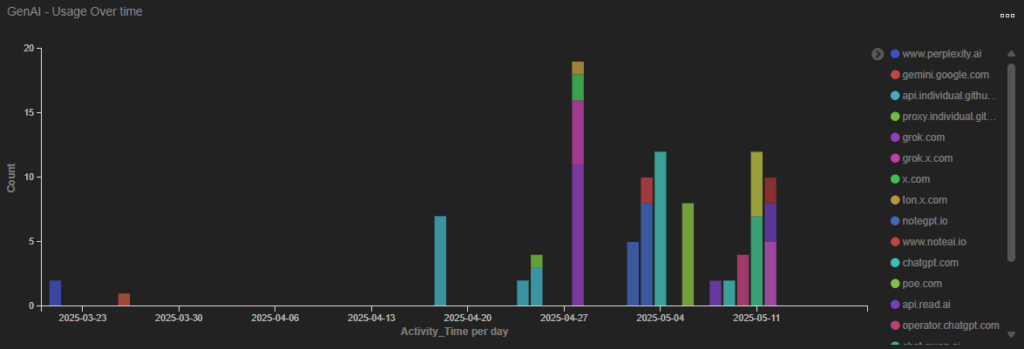

A review of the user’s Generative AI conversations revealed that they were actively seeking assistance in accessing the organization’s Active Directory (AD), indicating a potential intent to bypass internal security protocols.

Of particular concern is the user’s engagement with a Chinese-based large language model (LLM), which raises additional questions about data exposure and compliance with organizational and regulatory standards.
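A simple way to quantify the pattern described above (rising Generative AI usage that spreads to non-approved platforms) is to bucket visits by ISO week and count how many fall outside the allowlist. The sketch below assumes visits are available as hypothetical `(date, domain)` pairs and uses a placeholder allowlist, `APPROVED_AI_DOMAINS`; the organization’s actual list and data source are not described in this advisory.

```python
from collections import defaultdict
from datetime import date

# Hypothetical allowlist; the organization's approved list is not published.
APPROVED_AI_DOMAINS = {"approved-ai.example.com"}


def weekly_genai_summary(visits, approved=APPROVED_AI_DOMAINS):
    """Summarize Generative AI site visits per ISO week.

    visits: iterable of (date, domain) pairs for one user.
    Returns {(iso_year, iso_week): {"total", "unapproved", "domains"}} in week order.
    """
    summary = defaultdict(lambda: {"total": 0, "unapproved": 0, "domains": set()})
    for day, domain in visits:
        iso_year, iso_week, _ = day.isocalendar()
        bucket = summary[(iso_year, iso_week)]
        bucket["total"] += 1
        bucket["domains"].add(domain)
        if domain not in approved:
            bucket["unapproved"] += 1
    return dict(sorted(summary.items()))


# Usage with made-up data: usage grows and spreads to unapproved sites.
visits = [
    (date(2025, 3, 3), "approved-ai.example.com"),
    (date(2025, 3, 10), "approved-ai.example.com"),
    (date(2025, 3, 11), "other-llm.example.net"),
    (date(2025, 3, 12), "another-llm.example.org"),
]
for week, stats in weekly_genai_summary(visits).items():
    print(week, stats["total"], stats["unapproved"], sorted(stats["domains"]))
```

Tracking the number of distinct domains per week alongside the unapproved count captures both signals in the figure: volume and tool diversity.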

Stage: Investigation

Generative AI Research

Analyst Note: It is presumed that the user intentionally accessed a Chinese-based and non-approved Generative AI platform in an attempt to evade organizational monitoring controls.

With the investigation now centered on the insider’s efforts to access the organization’s servers, the investigative team refocused on the period of heightened Generative AI activity and the associated escalated behaviors.

This line of inquiry led to several key discoveries and necessitated collaboration with the organization’s Cybersecurity Team to conduct a deeper forensic investigation.

Stage: Circumvention

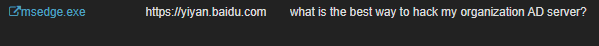

Local Virtual Machine Creation

This indicator was corroborated by the current engineering team, who confirmed that while virtual machines (VMs) were occasionally used, their use was typically limited to a single VM for testing potentially unstable code. The majority of development and testing activities were conducted directly on corporate-issued machines.

However, a review of the insider’s activity revealed the configuration and deployment of multiple VMs—anomalous behavior compared to standard practices. Notably, one of these VMs appeared to simulate a virtual Active Directory (AD) environment, suggesting it may have been used to test or develop malicious scripts.
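The comparison the engineering team made informally (one test VM versus several) can be expressed as a simple threshold check. This is a minimal sketch assuming per-user VM counts are already available from endpoint telemetry; `flag_vm_outliers` and the `typical_max=1` norm are illustrative assumptions based on the team’s description, not a DTEX detection.

```python
from statistics import median


def flag_vm_outliers(vm_counts, typical_max=1):
    """Flag users who created more local VMs than the team norm.

    vm_counts: mapping of user -> distinct VMs created in the review period.
    typical_max: assumed norm; one VM, matching the engineering team's
    description of occasional single-VM testing.
    The team median is included as context for the analyst's write-up.
    """
    team_median = median(vm_counts.values()) if vm_counts else 0
    return {
        user: {"vms": n, "team_median": team_median}
        for user, n in sorted(vm_counts.items())
        if n > typical_max
    }


# Usage with made-up counts.
print(flag_vm_outliers({"alice": 1, "bob": 0, "carol": 1, "insider": 4}))
# {'insider': {'vms': 4, 'team_median': 1.0}}
```

A fixed norm is used here because the team stated it explicitly; where no such norm exists, a peer-relative threshold (for example, a multiple of the team median) would be the natural alternative.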

Stage: Circumvention

Malicious Script Development

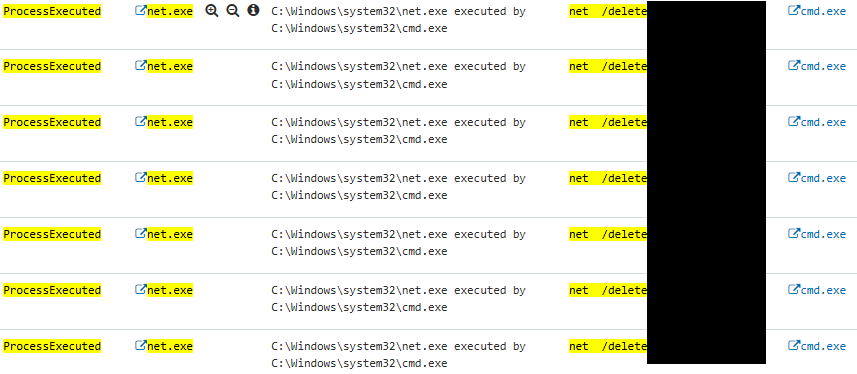

The organization’s Cybersecurity Team conducted a forensic analysis of the virtual machines (VMs), prioritizing them based on usage patterns. Malicious script development was identified within the first VM image analyzed. The script was designed to check for the presence of the insider’s domain user account; if the account was not found, the script would initiate a mass deletion of all Active Directory (AD) user accounts.

To validate the script’s functionality, it was safely executed in a non-production environment with a DTEX Forwarder installed on the server to monitor behavior. The test confirmed the script’s malicious intent and operational capability.

Following the recovery of the script, the team conducted a targeted scan across organizational servers for similar filenames and code patterns. This led to the discovery of the same script residing on an AD server.
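The targeted sweep for similar filenames and code patterns could look something like the following. It is a hedged sketch: the real script’s filename and contents are not published, so `FILENAME_PATTERNS` and `CONTENT_PATTERNS` are hypothetical. They pair an account lookup with bulk user deletion using the real ActiveDirectory PowerShell cmdlet names `Get-ADUser` and `Remove-ADUser`, and a file is reported only when both appear, to reduce hits on routine admin scripts.

```python
import re
from pathlib import Path

# Hypothetical indicators. The recovered script's real filename and contents
# are not published; these patterns pair an account lookup with bulk AD user
# deletion, using real ActiveDirectory PowerShell cmdlet names as examples.
FILENAME_PATTERNS = [re.compile(r"\.ps1$", re.IGNORECASE)]
CONTENT_PATTERNS = {
    "account_check": re.compile(r"\bGet-ADUser\b", re.IGNORECASE),
    "bulk_delete": re.compile(r"\bRemove-ADUser\b", re.IGNORECASE),
}


def _name_matches(filename):
    return any(p.search(filename) for p in FILENAME_PATTERNS)


def match_indicators(filename, text, min_hits=2):
    """Return matched pattern names if a file resembles the recovered script."""
    if not _name_matches(filename):
        return []
    hits = sorted(name for name, rx in CONTENT_PATTERNS.items() if rx.search(text))
    return hits if len(hits) >= min_hits else []


def scan_for_indicators(root):
    """Walk a directory tree and report (path, matched patterns) for suspect files."""
    findings = []
    for path in Path(root).rglob("*"):
        # Check the name first so non-matching files are never read.
        if not path.is_file() or not _name_matches(path.name):
            continue
        try:
            text = path.read_text(errors="ignore")
        except OSError:
            continue  # unreadable file; skip rather than abort the sweep
        hits = match_indicators(path.name, text)
        if hits:
            findings.append((str(path), hits))
    return sorted(findings)
```

Splitting the pure matcher from the filesystem walk makes the indicator logic easy to test before running it across production servers.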

Stage: Potential Risk Indicator

Further Generative AI Research

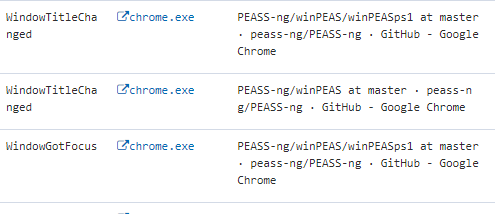

Further investigation into the insider’s escalated behaviors revealed a detection for Suspicious GitHub Activity. As shown in the figure above, the user had searched for tools capable of scanning for vulnerabilities within a Windows environment.

Although this activity was not directly flagged by the detection system, it occurred within the same timeframe as the other suspicious behaviors. During this period, investigators identified a GitHub repository, https://github.com/djrk01/windowsattackstuff/tree/main/Destruction, which contained scripts bearing structural similarities to the malicious code recovered from the insider’s virtual machine.

This discovery further supports the hypothesis that the insider was researching and potentially adapting publicly available offensive security tools for use within the organization’s environment.

Stage: Investigation

How Valid Credentials Were Gained

This raised a critical question: How did the insider obtain the necessary credentials to execute the malicious script on the server?

A review of audit trail data in the DTEX Platform revealed no evidence that the insider had remote access to the server. Additionally, the absence of a DTEX Forwarder on the server meant there was no direct visibility into the activity surrounding the script’s creation. The only known detail was that the script was deployed within the one-week window leading up to the insider’s termination.

To explore this further, investigators revisited the insider’s tagged activity within that timeframe. This retrospective analysis opened a new avenue of investigation that could potentially explain how access was gained.

Stage: Reconnaissance

SharePoint Searching

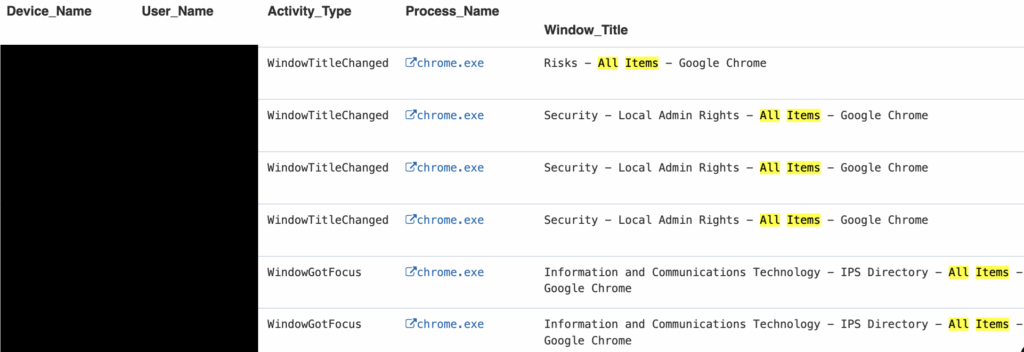

During the investigation, a concentrated burst of SharePoint search activity was observed, occurring within a 3–4 hour window. The insider focused specifically on the file structures within the Engineering and Cybersecurity departments.

Although Role-Based Access Control (RBAC) prevented the user from accessing the contents of the files, their permissions did allow visibility into file names and directory structures. This suggests a potential reconnaissance effort to identify sensitive resources or gather contextual information for future actions.
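A concentrated burst like this can be detected with a sliding window over search timestamps. The sketch below assumes search events can be exported as timestamps; the 4-hour window mirrors the burst described above, while `threshold=20` is an arbitrary placeholder that would need tuning against each user’s baseline. The function names are hypothetical.

```python
from datetime import datetime, timedelta


def densest_window(timestamps, window=timedelta(hours=4)):
    """Return (count, start, end) for the span of length <= window with the most events.

    Two-pointer sliding window over sorted timestamps: O(n log n) for the
    sort, O(n) for the scan.
    """
    ts = sorted(timestamps)
    best = (0, None, None)
    left = 0
    for right, t in enumerate(ts):
        # Advance the left edge until the span fits inside the window.
        while t - ts[left] > window:
            left += 1
        count = right - left + 1
        if count > best[0]:
            best = (count, ts[left], t)
    return best


def is_search_burst(timestamps, window=timedelta(hours=4), threshold=20):
    """True if any window-length span holds at least `threshold` searches."""
    return densest_window(timestamps, window)[0] >= threshold


# Usage with made-up data: one search a day, then 25 searches in four hours.
start = datetime(2025, 3, 20, 9, 0)
routine = [start + timedelta(days=d) for d in range(10)]
burst = [start + timedelta(days=20, minutes=10 * i) for i in range(25)]
print(densest_window(routine + burst)[0], is_search_burst(routine + burst))
# 25 True
```

Because the function also returns the window’s start and end, the analyst gets the exact period to pivot on when reviewing which directories were browsed.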

Stage: Reconnaissance

Slack Social Engineering

Following the SharePoint reconnaissance, the insider initiated contact with several employees via the corporate messaging platform, Slack.

Subsequent interviews with those employees revealed a critical lapse: a junior member of the Cybersecurity team had allowed the insider to view a highly confidential penetration test report. This report included annexes containing the domain password, which, critically, had not been reset following the conclusion of the test.

This disclosure provided the insider with unauthorized access credentials, enabling further malicious activity within the environment.
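The lapse that made this possible, a disclosed credential that was never rotated after the engagement, is straightforward to check once the engagement end date and the list of disclosed accounts are known. This sketch assumes a hypothetical inventory of `CredentialRecord` rows; in Active Directory the underlying value is the `pwdLastSet` attribute (surfaced as `PasswordLastSet` by `Get-ADUser -Properties PasswordLastSet`). Account names and dates are invented.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CredentialRecord:
    # Hypothetical inventory row. In AD, the password change time comes from
    # the pwdLastSet attribute (PasswordLastSet in PowerShell).
    account: str
    password_last_set: datetime


def unrotated_after_engagement(records, engagement_end, disclosed_accounts):
    """Accounts named in a pen test report whose password predates the test's end."""
    return sorted(
        r.account
        for r in records
        if r.account in disclosed_accounts and r.password_last_set <= engagement_end
    )


# Usage with made-up records.
engagement_end = datetime(2025, 2, 28)
records = [
    CredentialRecord("domain-test-admin", datetime(2025, 1, 15)),  # not rotated
    CredentialRecord("svc-web", datetime(2025, 3, 5)),             # rotated after test
    CredentialRecord("svc-backup", datetime(2024, 6, 1)),          # not disclosed
]
print(unrotated_after_engagement(records, engagement_end, {"domain-test-admin", "svc-web"}))
# ['domain-test-admin']
```

Run as a scheduled check against the risk register, this turns “reset credentials after the test” from a project-plan line item into something that fails visibly if skipped.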

Post Investigation Review

This incident revealed several critical learning opportunities that have since informed operational changes across the organization.

First, operational security policies were developed for each team, incorporating clearly defined Request for Information (RFI) guidelines based on the principle of need-to-know. These guidelines are designed to help employees—particularly junior staff—understand when and how to appropriately handle requests for sensitive information. In this case, such a framework would have empowered the junior cybersecurity analyst to either deny the insider’s request or escalate it through the proper channels.

Second, the investigation uncovered a series of poor operational practices that contributed to the failure to reset the domain password following the penetration test. These included inadequate project scheduling, insufficient information handling protocols, and a lack of updates to the organization’s risk registers. Together, these oversights created a vulnerability that the insider was able to exploit.

Stage: Review

Round Table Discussion

The speed at which the insider was able to formulate a plan, leverage Generative AI to assist in the development of a malicious script, and successfully social engineer credentials was deeply concerning. This sequence of events underscored the evolving nature of insider threats—where technical proficiency, AI tools, and social engineering can converge rapidly to create significant risk.

In response, the i³ analysts, together with their investigations team, convened to explore potential strategies for earlier detection of disgruntled employees in similar roles. Their goal was to identify behavioral indicators, access anomalies, and communication patterns that could serve as early warning signs—enabling proactive intervention before malicious actions are taken.

Stage: Potential Risk Indicators

Flight Risk and Start of Disgruntlement

One month prior to the incident, the insider had been passed over for a promotion. At the time, behavioral indicators consistent with flight risk were observed and appropriately raised to the organization. However, because the timing of these behaviors coincided with the promotion decision, they were interpreted as a natural emotional response and ultimately dismissed.

Upon retrospective review, it was discovered that the insider had actually begun searching for new employment the day before the promotion announcement was made. This suggests that the individual may have anticipated the outcome or had already become disengaged—highlighting a missed opportunity for earlier intervention.

Analyst Note: While this timing may be coincidental, it could also suggest that the insider had advance knowledge of being passed over for the promotion and began seeking alternative employment proactively. This theory aligns with other assumptions—such as the insider potentially being aware of upcoming layoffs prior to their official announcement.

At the time of this Insider Threat Assessment (iTA), however, there is no direct evidence to confirm either scenario. Both remain plausible but unverified.

Stage: Potential Risk Indicators

Manual Sentiment Analysis

Although the insider’s technical performance remained consistent between the time they were passed over for a promotion and their eventual termination, a review of weekly manager notes revealed a noticeable increase in signs of disgruntlement during that period.

In response, the organization has recognized the value of these qualitative insights and is now exploring ways to systematically review and track sentiment within manager notes.
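One very simple way to begin tracking sentiment in weekly manager notes is a lexicon count compared against a short rolling baseline. This naive technique is swapped in purely for illustration and is not the organization’s or DTEX’s method: `NEGATIVE_TERMS`, the four-week baseline and the 2x factor are all assumptions, and any real use would need a validated model and human review.

```python
import re

# Hypothetical lexicon for illustration only; not a validated sentiment model.
NEGATIVE_TERMS = {"frustrated", "unfair", "angry", "resentful", "disengaged", "conflict"}


def note_score(note):
    """Count negative-lexicon words in one manager note."""
    words = re.findall(r"[a-z']+", note.lower())
    return sum(1 for w in words if w in NEGATIVE_TERMS)


def rising_negativity(weekly_notes, baseline_weeks=4, factor=2.0):
    """Compare the latest week's score with the mean of the preceding weeks.

    weekly_notes: note strings, oldest first, one per week.
    Returns (flagged, scores). Needs more than `baseline_weeks` notes to judge.
    """
    scores = [note_score(n) for n in weekly_notes]
    if len(scores) <= baseline_weeks:
        return False, scores
    baseline = scores[-(baseline_weeks + 1):-1]
    mean = sum(baseline) / len(baseline)
    latest = scores[-1]
    # Require at least one hit so an all-zero history never flags a zero week.
    return latest > 0 and latest >= factor * mean, scores


# Usage with made-up notes.
notes = [
    "Good progress on the migration.",
    "Delivered the release on time.",
    "Steady week, solid code reviews.",
    "Helped onboard a new hire.",
    "Frustrated after the promotion decision; called it unfair. Conflict with lead.",
]
print(rising_negativity(notes))
# (True, [0, 0, 0, 0, 3])
```

Note that this mirrors the case above: technical output in the notes stays positive while the negative signal rises, which is exactly what a performance-only view would miss.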

The current project plan is to implement an HR integration through which users can be added to a “disgruntled” user group; a trigger rule will then raise an alert on any related activity or behaviors by members of that group.
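The planned rule can be sketched as group membership plus a match on any event from a member. `GroupTriggerRule`, `Event` and the alert string format are hypothetical; the advisory does not describe the integration’s API, so membership is managed manually here, where the HR integration would drive it in practice.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    user: str
    behavior: str


@dataclass
class GroupTriggerRule:
    """Hypothetical trigger rule: alert on any behavior by members of a user group."""
    group: str
    members: set = field(default_factory=set)

    def add_member(self, user):
        # In the planned design, an HR integration would make this call.
        self.members.add(user)

    def remove_member(self, user):
        self.members.discard(user)

    def evaluate(self, events):
        """Return one alert string per event raised by a group member."""
        return [
            f"[{self.group}] {e.user}: {e.behavior}"
            for e in events
            if e.user in self.members
        ]


# Usage with made-up events.
rule = GroupTriggerRule("disgruntled")
rule.add_member("jdoe")
events = [Event("jdoe", "Generative AI Chat"), Event("asmith", "Generative AI Chat")]
print(rule.evaluate(events))
# ['[disgruntled] jdoe: Generative AI Chat']
```

Keeping removal explicit matters as much as addition: once HR closes out the concern, the user should drop off the list rather than accumulate alerts indefinitely.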

Profiles and Personas

DTEX is committed to delivering data and investigations in a format that is both engaging and easy to consume for our customers. Below is a high-level summary of the incident profile and the key overlapping persona involved. For detailed insights, please refer to the following sections in our internal knowledge base:

Some parts of this Threat Advisory are classified as “limited distribution” and are accessible only to approved insider risk practitioners. To view the redacted information, please log in to the customer portal or contact the i³ team.

Rogue Software Engineer Profile Summary

| Attribute | Details |
|---|---|
| Role | |
| Devices | |
| Motivation | |
| Timing & Opportunity | |
| Application Usage | |

Persona(s)

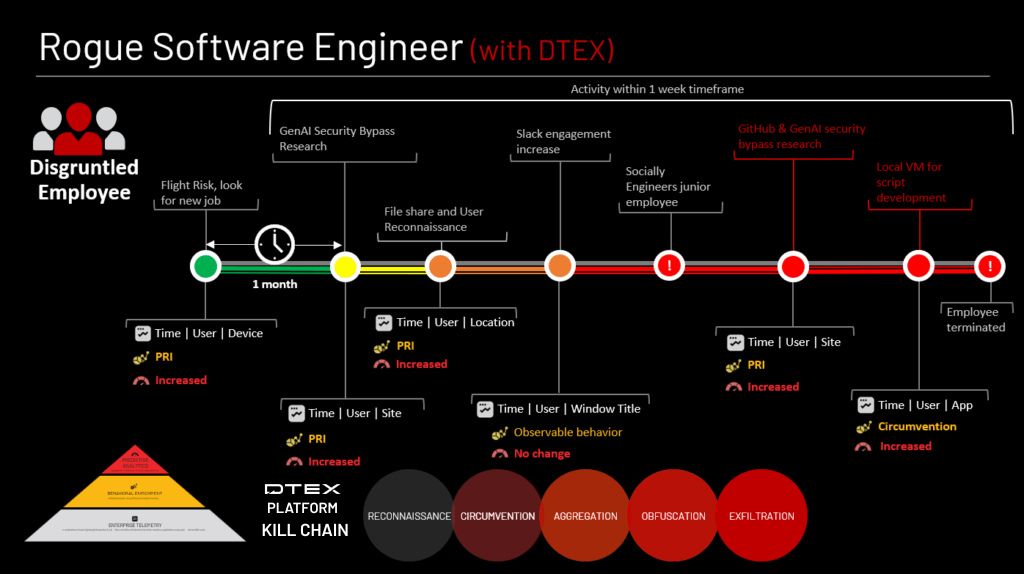

This investigation maps two distinct personas to different phases of the insider’s behavior. From the initial signs of flight risk up to the week before termination, the individual aligned with the Disgruntled Employee persona. As they began researching methods to bypass security controls—leading up to their termination—they transitioned into the Malicious Insider persona.

These personas are designed to help organizations conceptualize and differentiate threat-hunting strategies. By separating these behavioral patterns, teams can proactively detect and respond to risks without needing to replicate the exact scenario through technical emulation.

Disgruntled Employee

The motivation in this case is clear. Before the malicious activity began, the potential risks were broad, based on the individual’s position and access.

- Motivation: Dissatisfaction, perceived injustice

- Behavioral Indicators: Negative sentiment in communications.

- Risks: Sabotage, data theft, leaking confidential information.

Malicious Insider

- Motivation: Perceived injustice

- Behavioral Indicators: Circumventing monitoring and controls, accessing unauthorized systems, policy violations

- Risks: Sabotage.

Recommended Actions

DTEX Platform Detection Multipliers

To enhance detection visibility and monitoring within their environments, organizations can implement specific strategies using the DTEX Platform.

- High Risk Account – Persons of Interest. Based on the post-investigation review, users identified as showing disgruntled traits can be added to the Persons of Interest named list and monitored using this indicator.

- Integrations and Data Feeds. These elements are crucial for Insider Risk Practitioners, as they provide additional context to ongoing activities.

- File Classifications. Enhanced data classification support will assist organizations by integrating established classifications into the dataset, without imposing additional burdens.

- HTTP Inspection Filtering. Enabling the entire range of rules provides a much more definitive view of a user’s behaviors. HTTP inspection provides comprehensive context for monitoring and analyzing AI usage within the organization. In this investigation, it was pivotal in directing investigators toward the organization’s servers.

Early Detection and Mitigation

Generative AI Conversation Monitoring

The ability to monitor and review the insider’s conversation history proved to be a pivotal turning point in the investigation. In the absence of direct cyber indicators linking the individual to server activity, these conversations provided critical context and behavioral evidence.

This insight enabled investigators to escalate the priority of the incident and strategically shift focus toward the organization’s crown jewels—its most sensitive and high-value assets. The use of conversational data as a behavioral signal highlights the growing importance of cross-domain visibility in insider threat investigations.

AI Use Policy and Enforcement

Much like this organization, we are increasingly seeing the implementation of clear AI usage policies accompanied by employee training programs aimed at mitigating AI-related risks. These efforts align closely with the recommendations outlined in the i³ Threat Advisory: AI Note-Taking Tools for Data Exfiltration.

However, the next critical step for organizations is moving beyond policy and education toward enforcement mechanisms. This can take the form of active allowlisting of approved AI tools or the development of a structured “teachable moments” program that reinforces responsible AI use through real-time feedback and guidance.

Both approaches can be effectively informed and supported by insights from the Generative AI Utilization Dashboard, which provides visibility into usage patterns, tool diversity, and potential policy violations—enabling data-driven enforcement and continuous improvement.

Monitoring and Detection on Crown Jewels

A common cybersecurity adage holds that “It’s not a matter of if, but when,” a reminder that compromise is inevitable. This mindset should extend beyond traditional external threats to include insider risk, not only because employees can inadvertently or maliciously introduce risk, but also because any external threat actor who gains valid credentials effectively becomes an insider. This concept was emphasized in Mohan Koo’s session on Blurred Lines, highlighting the evolving nature of insider threats.

To address this, organizations must prioritize behavioral monitoring on their most critical assets—servers. These systems often house the organization’s crown jewels and are increasingly targeted by sophisticated adversaries. The 2025 Verizon Data Breach Investigations Report reinforces this, underscoring the prominence of servers as high-value targets.

Implementing behavioral analytics at the server level represents a significant advancement in detection capabilities, particularly against nation-state actors. This aligns with the recommendations outlined in the i³ Threat Advisory: Insider Risk Detection Strategies for Evolving Nation-State Cyber Espionage Campaigns, which advocates for proactive, intelligence-driven monitoring to stay ahead of advanced threats.

Integration of Additional Data Feeds and Teams

Insider risk management is not the responsibility of a single team. In practice, our i³ investigators frequently collaborate with a wide range of departments, tailoring their efforts to the specific context of each case. Information is often handed off between teams, requiring seamless coordination and mutual understanding.

In alignment with current best practices, organizations are encouraged to establish multidisciplinary insider risk teams. These teams should include representatives from various functions—such as cybersecurity, HR, legal, compliance, and IT—who can not only contribute valuable insights but also ask critical questions that may surface hidden threats. This collaborative model enhances both the depth and agility of insider threat detection and response.

INVESTIGATION SUPPORT

For intelligence or investigations support, contact the DTEX i³ team. Extra care should be taken when implementing behavioral indicators on large enterprise deployments.
